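Elasticsearch is a distributed, RESTful search and analytics engine that can handle a steadily growing range of use cases. As the core of the Elastic Stack, it stores your data centrally and helps you discover both the expected and the unexpected.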

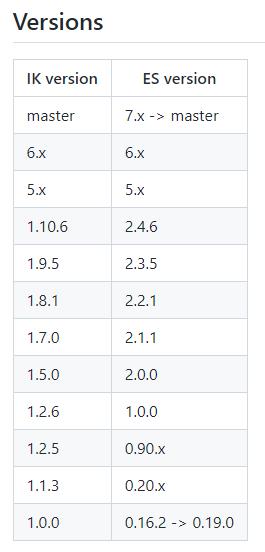

Downloads and documentation for IK Analysis for Elasticsearch. The first link is a mirror hosted in mainland China:

https://gitcode.net/mirrors/medcl/elasticsearch-analysis-ik

https://github.com/medcl/elasticsearch-analysis-ik

IK analyzer extension words and stop words:

http://www.javacui.com/tool/631.html

The IK Analysis plugin integrates the Lucene IK analyzer (http://code.google.com/p/ik-analyzer/) into Elasticsearch and supports custom dictionaries.

Analyzer: ik_smart, ik_max_word. Tokenizer: ik_smart, ik_max_word.

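I downloaded elasticsearch-analysis-ik-8.2.0. Under elasticsearch-8.2.2\plugins, create a new folder named ik and extract the downloaded archive into it.

Note that the ES I have been using throughout is 8.2.2, while the plugin was built for 8.2.0, so the IK configuration has to be adjusted to match. Edit the configuration file plugin-descriptor.properties: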
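The extraction step can also be scripted. The following is a minimal sketch, not an official installer: the function name `install_plugin` and its parameters are made up for illustration, and it assumes the downloaded file is a regular zip archive.

```python
import zipfile
from pathlib import Path


def install_plugin(zip_path: str, es_home: str, plugin_name: str = "ik") -> Path:
    """Extract a plugin archive into <es_home>/plugins/<plugin_name>.

    The folder name "ik" follows the post; any name should work as long as
    the archive contents sit directly inside that folder.
    """
    target = Path(es_home) / "plugins" / plugin_name
    target.mkdir(parents=True, exist_ok=True)
    with zipfile.ZipFile(zip_path) as archive:
        archive.extractall(target)
    return target
```

For example, `install_plugin("elasticsearch-analysis-ik-8.2.0.zip", "elasticsearch-8.2.2")` would create `elasticsearch-8.2.2/plugins/ik` and unpack the archive there.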

    # 'elasticsearch.version' version of elasticsearch compiled against
    # You will have to release a new version of the plugin for each new
    # elasticsearch release. This version is checked when the plugin
    # is loaded so Elasticsearch will refuse to start in the presence of
    # plugins with the incorrect elasticsearch.version.
    elasticsearch.version=8.2.2

Set elasticsearch.version to the version of your ES installation.
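If you prefer not to edit the file by hand, the version line can be patched with a few lines of Python. This is only a sketch under the assumption that the descriptor contains exactly one uncommented `elasticsearch.version=` line; the helper name `set_es_version` is hypothetical.

```python
import re
from pathlib import Path


def set_es_version(descriptor_path: str, es_version: str) -> str:
    """Rewrite the elasticsearch.version line and return the new file content."""
    path = Path(descriptor_path)
    text = path.read_text(encoding="utf-8")
    # Anchor at line start so commented lines ("# 'elasticsearch.version' ...")
    # are left untouched.
    new_text, count = re.subn(
        r"^elasticsearch\.version=.*$",
        lambda _match: f"elasticsearch.version={es_version}",
        text,
        flags=re.MULTILINE,
    )
    if count == 0:
        raise ValueError("no elasticsearch.version line found")
    path.write_text(new_text, encoding="utf-8")
    return new_text
```

Calling `set_es_version("plugins/ik/plugin-descriptor.properties", "8.2.2")` would then apply the change described above.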

After restarting ES, test the plugin by entering the following in the Kibana console:

    POST /_analyze
    {
      "text": "你好,我知道了,中国是一个伟大的祖国,我爱你",
      "analyzer": "ik_smart"
    }

The response:

    {
      "tokens" : [
        {
          "token" : "你好",
          "start_offset" : 0,
          "end_offset" : 2,
          "type" : "CN_WORD",
          "position" : 0
        },
        {
          "token" : "我",
          "start_offset" : 3,
          "end_offset" : 4,
          "type" : "CN_CHAR",
          "position" : 1
        },
        {
          "token" : "知道了",
          "start_offset" : 4,
          "end_offset" : 7,
          "type" : "CN_WORD",
          "position" : 2
        },
        {
          "token" : "中",
          "start_offset" : 8,
          "end_offset" : 9,
          "type" : "CN_CHAR",
          "position" : 3
        },
        {
          "token" : "国是",
          "start_offset" : 9,
          "end_offset" : 11,
          "type" : "CN_WORD",
          "position" : 4
        },
        {
          "token" : "一个",
          "start_offset" : 11,
          "end_offset" : 13,
          "type" : "CN_WORD",
          "position" : 5
        },
        {
          "token" : "伟大",
          "start_offset" : 13,
          "end_offset" : 15,
          "type" : "CN_WORD",
          "position" : 6
        },
        {
          "token" : "的",
          "start_offset" : 15,
          "end_offset" : 16,
          "type" : "CN_CHAR",
          "position" : 7
        },
        {
          "token" : "祖国",
          "start_offset" : 16,
          "end_offset" : 18,
          "type" : "CN_WORD",
          "position" : 8
        },
        {
          "token" : "我爱你",
          "start_offset" : 19,
          "end_offset" : 22,
          "type" : "CN_WORD",
          "position" : 9
        }
      ]
    }

The plugin is working, but there is a problem: the Chinese segmentation is not very accurate. For example, 中国是 is split into 中 and 国是 instead of 中国 and 是. To fix this you need to extend the dictionary with your own words.
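One rough way to find words worth adding to the dictionary is to look at the single-character tokens in an `_analyze` response. The sketch below works on an already parsed response; the helper name `single_char_tokens` is hypothetical, and it relies only on the `type` field seen in the response above. Not every CN_CHAR token is a mistake (的 is a legitimate single-character word), so treat the result as a list of candidates to review.

```python
import json


def single_char_tokens(response: dict) -> list:
    """Return the tokens that the analyzer emitted as single characters (CN_CHAR)."""
    return [
        item["token"]
        for item in response.get("tokens", [])
        if item.get("type") == "CN_CHAR"
    ]


# A three-token excerpt of the response shown above.
sample = json.loads("""
{
  "tokens": [
    {"token": "中", "start_offset": 8, "end_offset": 9, "type": "CN_CHAR", "position": 3},
    {"token": "国是", "start_offset": 9, "end_offset": 11, "type": "CN_WORD", "position": 4},
    {"token": "一个", "start_offset": 11, "end_offset": 13, "type": "CN_WORD", "position": 5}
  ]
}
""")

print(single_char_tokens(sample))  # ['中']
```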

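The end of the article on IK extension words and stop words linked above explains how to import Sogou's official word lists into your own dictionary; refer to it for details.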
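As a starting point, a custom word list can be generated with a short script. This sketch assumes that an IK dictionary is a UTF-8 text file with one word per line and that it still has to be registered under the `ext_dict` entry of the plugin's IKAnalyzer.cfg.xml; check the IK documentation for your version. The function name `write_dictionary` and the file name `custom.dic` are made up for illustration.

```python
from pathlib import Path


def write_dictionary(words, dic_path: str) -> list:
    """Write unique, non-empty words to a UTF-8 file, one word per line."""
    unique = []
    for word in words:
        word = word.strip()
        if word and word not in unique:
            unique.append(word)  # keep first-seen order, drop duplicates
    path = Path(dic_path)
    path.parent.mkdir(parents=True, exist_ok=True)
    path.write_text("\n".join(unique) + "\n", encoding="utf-8")
    return unique
```

For example, `write_dictionary(["中国", "伟大的祖国"], "plugins/ik/config/custom.dic")` writes a two-line file. ES normally needs a restart before a newly registered dictionary takes effect.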
